
From Agentic Workflows to the AI Operating System

Why Arpia Is Building What Comes After the Agentic-AI Era

The Problem with Today’s AI Hype

AI has never been more visible. From viral agents to plug-and-play copilots, the world is fascinated with intelligent tools. But beneath the buzz, the truth is simple: most AI workflows today are stitched together with duct tape. You get an LLM here, a script there, maybe a dashboard and a vector DB. None of them talk deeply to each other. Memory is ephemeral. Context dies between requests. The outputs might be pretty, but the reasoning is fragile.

It works for demos. But not for mission-critical operations.

It is easy to fall into the trap of thinking that complexity equals sophistication. The opposite trap is just as real: a simpler tool is not automatically a scalable one.

In my experience, simplicity should serve the builder—making creation intuitive, frictionless, and fast. But under the surface, the system must remain powerful. It’s not about being simple for the sake of minimalism. It’s about doing the heavy lifting on behalf of the user. That’s the true value of any tool: to handle complexity so the creator doesn’t have to.

Introducing Multi-Contextual Prompting (MCP)

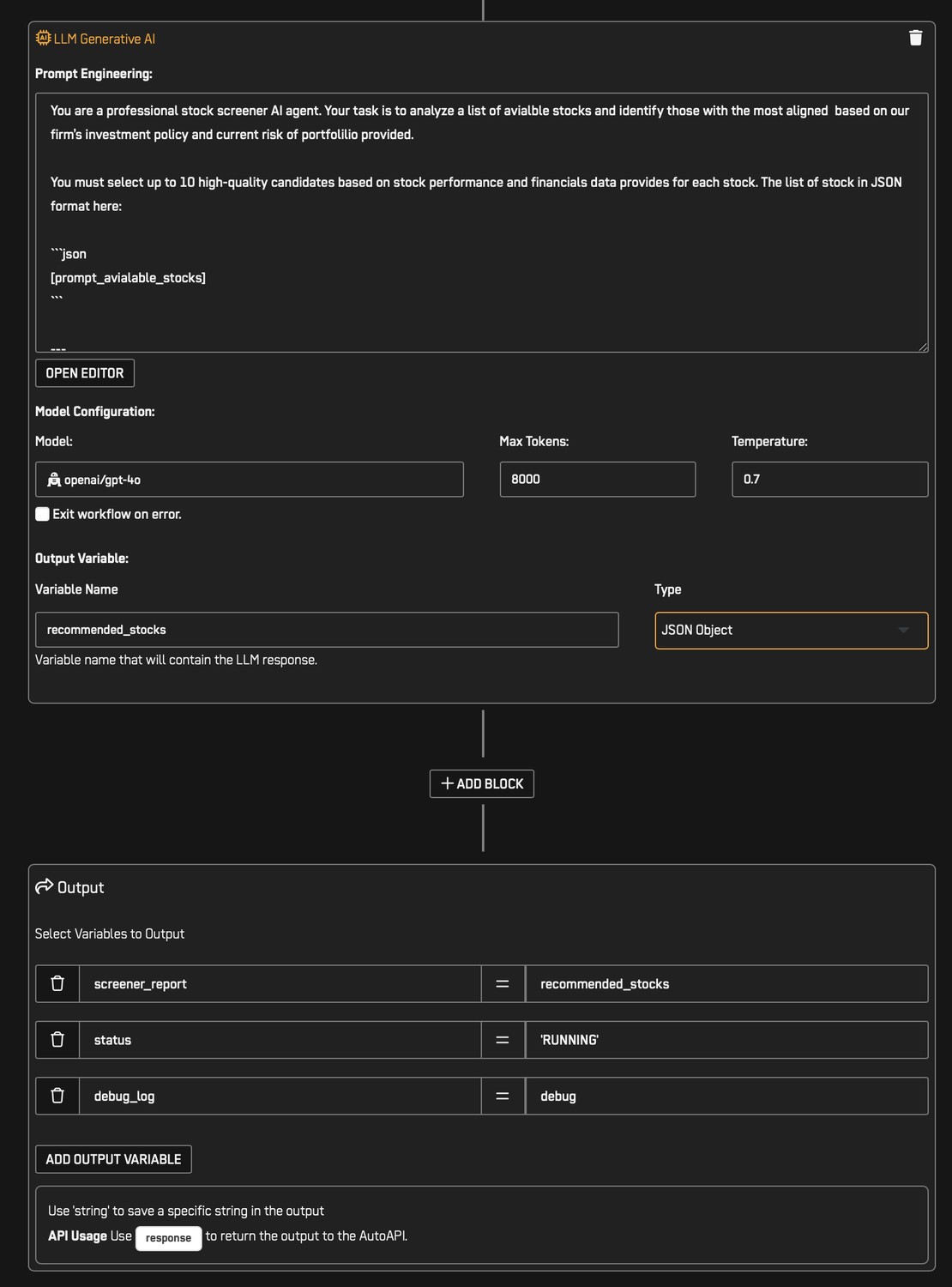

At Arpia, we pioneered Multi-Contextual Prompting (MCP) not as a prompt hack, but as a structural principle. MCP is the idea that every intelligent process must:

- Carry state and context across multiple agents.

- Be able to nest, like microservices of thought.

- Pass and receive variables, not just strings.

- Operate on data, over time, in real workflows.
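To make the four principles above concrete, here is a minimal Python sketch. It is an illustration only, not Arpia's implementation: `Context`, `pipeline`, and the three step functions are hypothetical names invented for this example. It shows state carried across steps, typed variables instead of strings, and a pipeline nested inside another pipeline.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Context:
    """Hypothetical shared state carried from step to step."""
    variables: Dict[str, Any] = field(default_factory=dict)
    trace: List[str] = field(default_factory=list)


# A step takes a Context and returns it, usually with new variables added.
Step = Callable[[Context], Context]


def pipeline(name: str, *steps: Step) -> Step:
    """Compose steps into a single step, so a pipeline can nest inside another."""
    def run(ctx: Context) -> Context:
        ctx.trace.append(f"enter {name}")
        for step in steps:
            ctx = step(ctx)
        ctx.trace.append(f"exit {name}")
        return ctx
    return run


def load_orders(ctx: Context) -> Context:
    # Illustrative data; stored as a typed list of floats, not a string.
    ctx.variables["orders"] = [120.0, 80.5, 310.0]
    ctx.trace.append("load_orders")
    return ctx


def total_orders(ctx: Context) -> Context:
    ctx.variables["total"] = sum(ctx.variables["orders"])
    ctx.trace.append("total_orders")
    return ctx


def flag_large(ctx: Context) -> Context:
    # Reads a variable produced inside the nested pipeline.
    ctx.variables["needs_review"] = ctx.variables["total"] > 500
    ctx.trace.append("flag_large")
    return ctx


inner = pipeline("summarize", load_orders, total_orders)
outer = pipeline("review", inner, flag_large)  # a pipeline used as a step

result = outer(Context())
print(result.variables["total"], result.variables["needs_review"])
print(result.trace)
```

Because a pipeline has the same signature as a single step, nesting needs no special case, and the trace records every step in order for later debugging.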

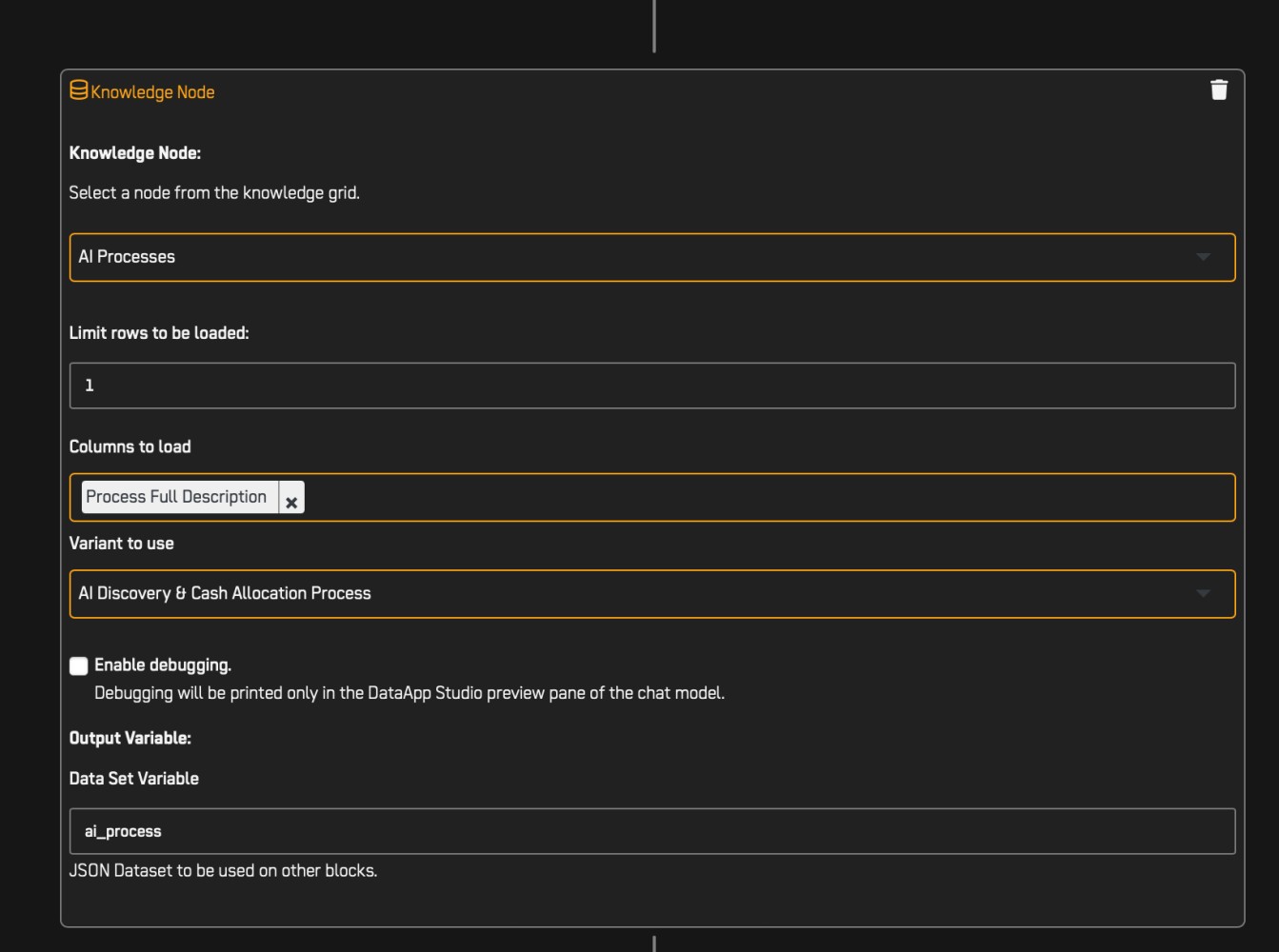

MCP is what allows a Generative Agent to pull structured context from a business process, apply reasoning, and route results to a downstream AI or human step, without breaking memory or traceability.

It’s the glue that holds true orchestration together.

Figure labels: Agentic MCP inside the main AI Worker MCP; true access to the Knowledge Grid; full MCP, storage, and debugging memory.

Agentic MCP Inside Agentic MCP

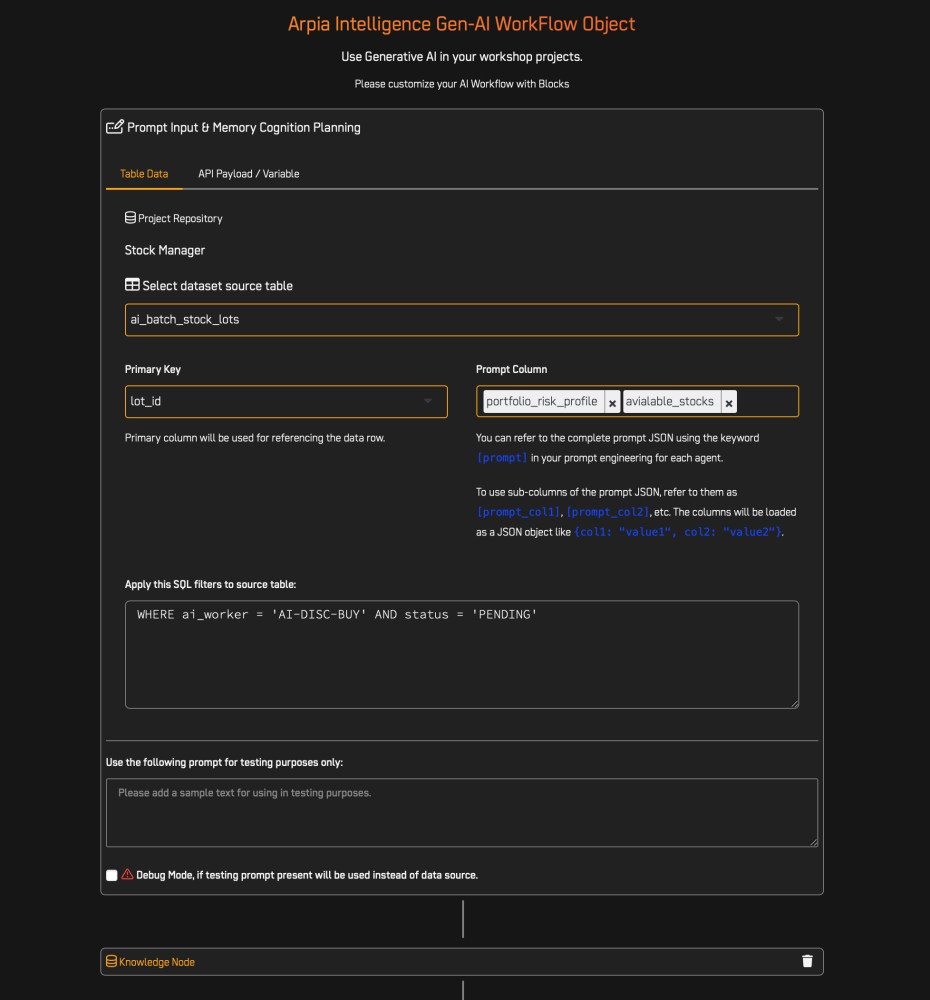

In Arpia, you don’t just run a single agent. You build agentic systems. One AI object might:

- Run SQL filters on a dataset.

- Feed cleaned variables to a prompt.

- Store memory as structured context.

- Pass that to another agent that makes a judgment.

- Return both the result and reasoning chain.

And that entire block—an autonomous Agentic Workflow—can be used inside another agent.

We don’t just support MCP. We support recursive orchestration. That’s the beginning of a true AI Operating System.
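The five steps listed above, and the idea of reusing the whole block inside another agent, can be sketched in plain Python. Everything here is an assumption made for illustration: the `invoices` table, the agent function names, and the `AgentResult` type are hypothetical, and a simple threshold rule stands in for the model call that a real judging agent would make.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class AgentResult:
    """Hypothetical return type: the result plus the reasoning chain."""
    result: Any
    reasoning: List[str] = field(default_factory=list)


def judge_agent(memory: Dict[str, Any]) -> AgentResult:
    # Stand-in for a model call: a fixed threshold rule.
    total = memory["variables"]["total"]
    decision = "escalate" if total > 1000 else "remind"
    return AgentResult(decision, [f"judge: total {total:.2f} -> {decision}"])


def overdue_invoice_agent(conn: sqlite3.Connection, min_days: int) -> AgentResult:
    reasoning: List[str] = []

    # 1. Run a SQL filter on the dataset.
    rows = conn.execute(
        "SELECT customer, amount FROM invoices "
        "WHERE days_overdue >= ? ORDER BY customer",
        (min_days,),
    ).fetchall()
    reasoning.append(f"filter kept {len(rows)} invoices overdue >= {min_days} days")

    # 2. Feed cleaned variables to a prompt.
    variables = {"count": len(rows), "total": sum(amount for _, amount in rows)}
    prompt = "Assess {count} overdue invoices totalling {total:.2f}.".format(**variables)
    reasoning.append(f"prompt: {prompt}")

    # 3. Store memory as structured context.
    memory = {"variables": variables, "customers": [c for c, _ in rows]}

    # 4. Pass that context to another agent that makes a judgment.
    verdict = judge_agent(memory)
    reasoning.extend(verdict.reasoning)

    # 5. Return both the result and the reasoning chain.
    return AgentResult(verdict.result, reasoning)


def collections_agent(conn: sqlite3.Connection) -> AgentResult:
    """Outer agent that reuses the whole workflow above as a single block."""
    inner = overdue_invoice_agent(conn, min_days=30)
    reasoning = ["collections: delegated to overdue_invoice_agent", *inner.reasoning]
    return AgentResult({"action": inner.result}, reasoning)


# Illustrative in-memory data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (customer TEXT, amount REAL, days_overdue INTEGER)")
conn.executemany(
    "INSERT INTO invoices VALUES (?, ?, ?)",
    [("acme", 900.0, 45), ("globex", 250.0, 31), ("initech", 400.0, 5)],
)

outcome = collections_agent(conn)
print(outcome.result)
for line in outcome.reasoning:
    print(line)
```

The outer agent never inspects how the inner workflow reached its decision, yet the reasoning chain survives the hand-off intact, which is the property the recursive design depends on.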

What Makes Arpia Different

We didn’t lock ourselves into an Agentic AI model or limit our vision to tools that depend solely on LLMs.

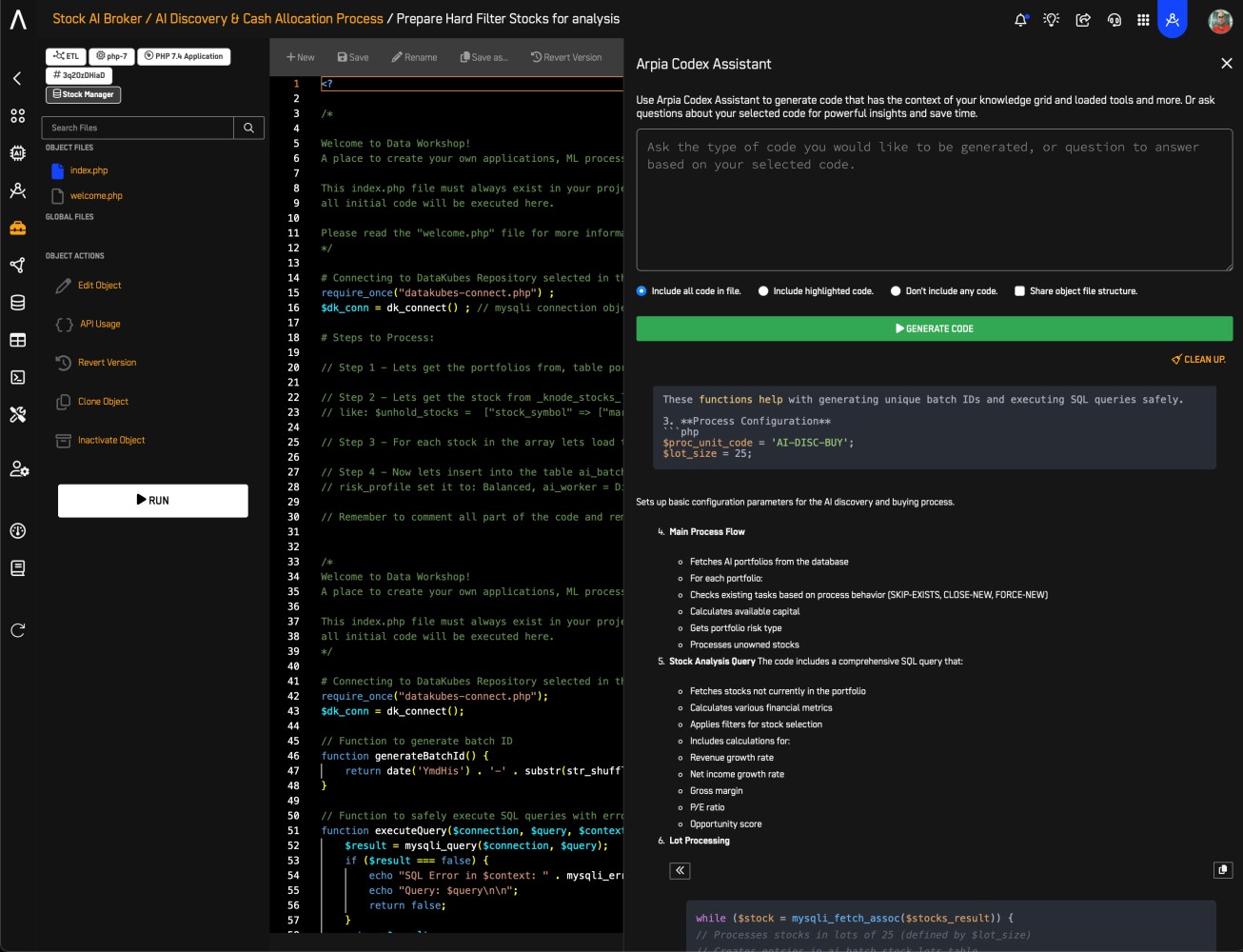

Instead, we built a foundation that scales all the way down to the simplest task—like creating an AI Codex Assistant that turns a single developer into a team of ten. This is not just automation; it’s augmentation. It’s the neural link between the builder, the unified knowledge grid, and AI—elevating both human and machine to their highest potential.

It’s not about replacing the developer; it’s about evolving them into the ultimate AI Builder—fluid across languages, empowered by orchestration, and amplified by purpose-built intelligence.

Most AI platforms stop at prompt chaining. We go far beyond:

- Full Multi-Language Execution: Combine Python, PHP, LLMs, SQL and UI logic in one coherent chain.

- Composable Intelligence: Every block is versioned, reusable, and auditable.

- Memory by Design: Our variables aren’t blobs of text. They’re typed, contextual, and persisted.

- Visual & API-Native: Build flows visually, run them from APIs. Same logic, different form.

- AI with Governance: Each action is explainable, loggable, and policy-aware.

This isn’t a prompt playground. It’s an industrial-grade AI Engine for operations.
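As one way to picture "typed, contextual, and persisted" variables, consider the short Python sketch below. It is not Arpia's data model: the `TypedVariable` class, its fields, and the block names and version strings are all hypothetical. Each variable declares its type, records which block and version produced it, and survives a round trip through JSON storage.

```python
import json
from dataclasses import asdict, dataclass
from typing import List

# Hypothetical type registry; a real platform would support richer types.
_TYPES = {"float": float, "int": int, "str": str, "bool": bool}


@dataclass(frozen=True)
class TypedVariable:
    name: str
    type_name: str      # declared type, checked on creation
    value: object
    source_block: str   # context: which block produced the value
    block_version: str  # context: which version of that block

    def __post_init__(self) -> None:
        expected = _TYPES[self.type_name]
        # Exact type match, so a bool cannot pass as an int.
        if type(self.value) is not expected:
            raise TypeError(
                f"{self.name}: expected {self.type_name}, "
                f"got {type(self.value).__name__}"
            )


def persist(variables: List[TypedVariable]) -> str:
    """Serialize variables to JSON, standing in for durable storage."""
    return json.dumps([asdict(v) for v in variables], sort_keys=True)


def restore(payload: str) -> List[TypedVariable]:
    """Rebuild variables from storage; type checks run again on load."""
    return [TypedVariable(**item) for item in json.loads(payload)]


variables = [
    TypedVariable("churn_risk", "float", 0.82, "score_customer", "1.3.0"),
    TypedVariable("segment", "str", "enterprise", "classify_account", "2.0.1"),
]
restored = restore(persist(variables))
print(restored == variables)
```

Recording the producing block and its version alongside each value is what makes a later audit possible: any stored number can be traced back to the exact logic that created it.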

Why This Matters for the Future

Enterprises don’t need another chatbot. They need systems that think, act, remember, and adapt. We believe:

- The next evolution is Orchestrated AI, not isolated models.

- The future belongs to AI Systems, not tools.

- And the winners will be those who master the structure of cognition, not just the interface.

We’re not building hype. We’re building infrastructure for intelligence and scale.

Why AI Engineers & Scientists Should Join Us

If you’re tired of demos and ready to build:

- Real autonomous flows

- Multi-agent orchestration

- Distributed reasoning systems

Then Arpia is where you belong. Here, LLMs aren’t endpoints. They’re collaborators in a larger system. Context isn’t lost, it’s managed. And intelligence isn’t magic, it’s engineered.

We’re building AI that creates real value—not just for the builder, but for the entire organization.

At Arpia, augmentation goes beyond productivity hacks or clever prompts. We design AI systems that embed intelligence into the fabric of an enterprise—transforming workflows, accelerating execution, and unlocking decision-making at scale. This isn’t about replacing humans; it’s about empowering every function, every role, and every process to operate at its smartest.

The AI OS Starts Here

We believe every enterprise will soon run on an AI Operating System. Not just to automate, but to reason, act, learn, and evolve.

We’re not just imagining that future. We’re building it.

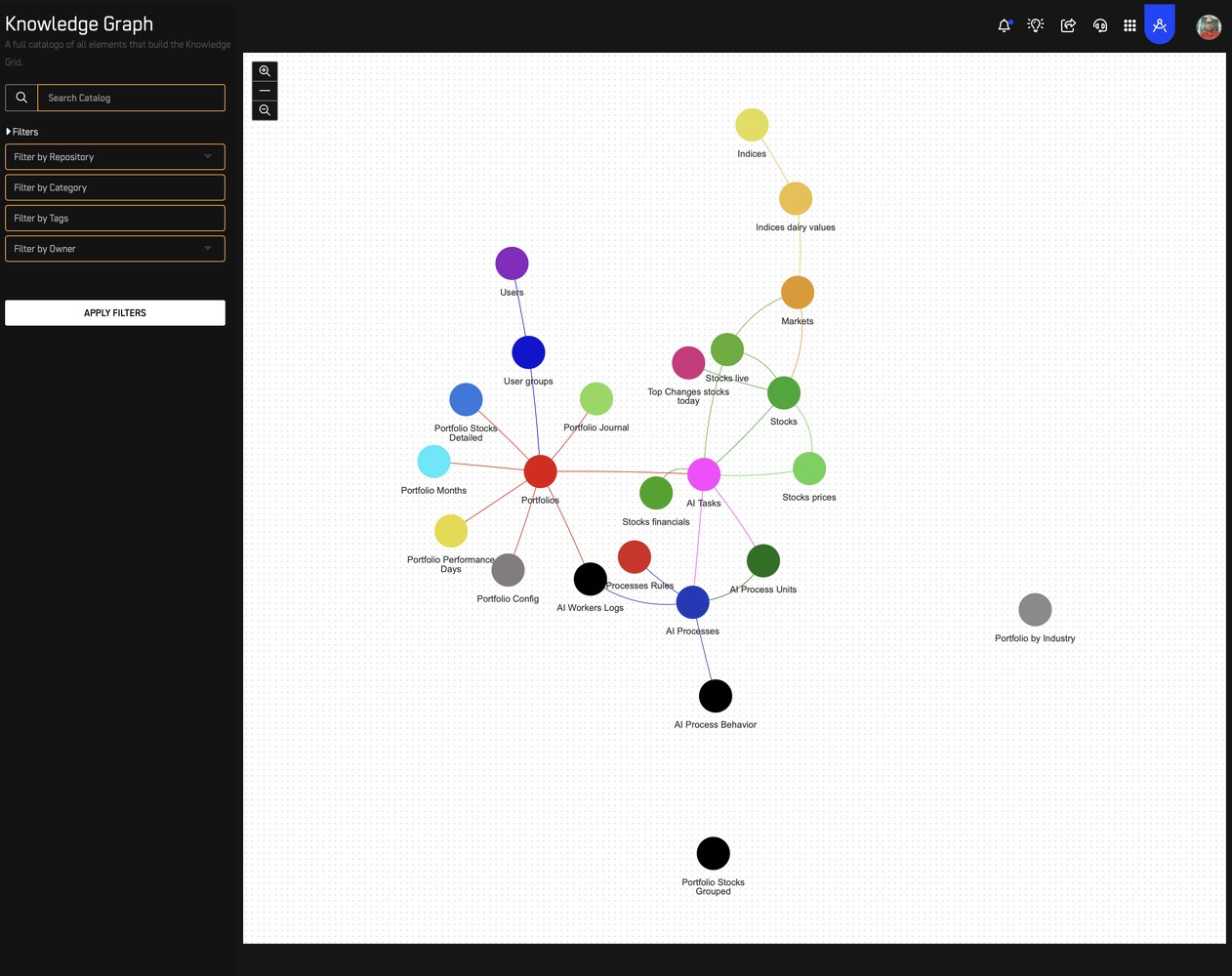

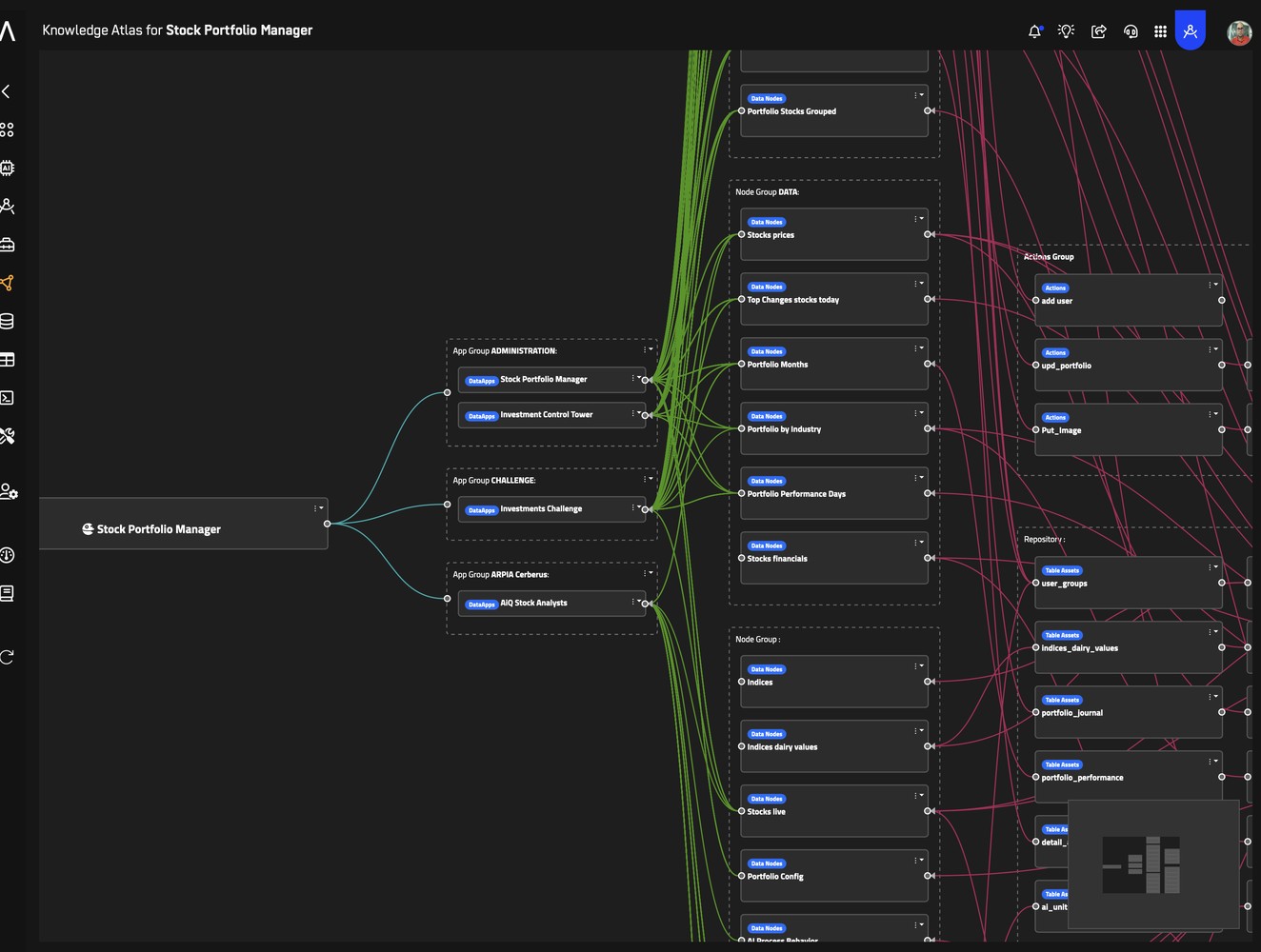

Figures: semantic access to the Knowledge Grid; the Arpia Atlas full AI Operating System.

If you’re building what comes after the hype, we’re hiring.